[notes by Erik T.]

The following lecture is based on W. H. Zurek, “Decoherence and the transition from quantum to classical – revisited”, arXiv:quant-ph/0306072 (June 2003), an updated version of the article in Physics Today 44, 36-44 (Oct 1991).

The border between the classical and the quantum worlds. Drawing by W. H. Zurek, re-blogged in “Reading in the dark” (St. Hilda’s underground book club).

Zurek compares classical physics to quantum mechanics by looking at how measurements work in each. An example is given by a gravitational wave detector, where the momentum transfer of photons exerts an indeterminate force on a mirror. In quantum mechanics, one needs an amplifier to measure quantum effects because they usually occur on tiny scales: this is what N. Bohr asks for when crossing the borderline.

But this border between the macroscopic and the microscopic is itself undefined. Often the required precision determines where the line is drawn. It is also related to the simplifications we make. The many-worlds theory goes so far as to push it back into the consciousness of the observer: to quote Zurek, this is notably an “unpleasant location to do physics”.

Decoherence is the loss of the ability to interfere. It happens because of the exchange of information and energy between a system and its environment. Zurek especially considers quantum-chaotic systems, so first of all we need to define what a chaotic system is. A chaotic system is characterised by a strong sensitivity to initial conditions and to perturbations. An example of this is the “baker’s map” illustrated below: an area gets stretched and compressed and then folded back together. After a few iterations, this completely disrupts the initial structures.

Visualization of the baker’s map, inspired by a figure in S. Strogatz, Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering (Westview Press 1994).
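The sensitivity to initial conditions can be checked numerically. The following is a minimal sketch, assuming the common "cut and stack" form of the baker's map on the unit square (the folded variant behaves similarly); the function names `baker_map` and `separation_history` are illustrative and not taken from the lecture.

```python
def baker_map(x, y):
    """One iteration of the (cut-and-stack) baker's map on the unit square.

    The square is stretched by 2 along x, compressed by 2 along y,
    and the right half is stacked on top of the left half.
    """
    if x < 0.5:
        return 2.0 * x, y / 2.0
    return 2.0 * x - 1.0, (y + 1.0) / 2.0


def separation_history(p, q, steps):
    """Horizontal distance between two orbits, initial value included."""
    history = [abs(p[0] - q[0])]
    for _ in range(steps):
        p = baker_map(*p)
        q = baker_map(*q)
        history.append(abs(p[0] - q[0]))
    return history


# Two points that start 1e-9 apart along the stretching direction.
history = separation_history((0.1, 0.3), (0.1 + 1e-9, 0.3), steps=10)
growth = history[-1] / history[0]
print(f"separation grew by a factor of {growth:.0f} in 10 iterations")
```

As long as both points stay in the same half of the square, their distance doubles at every step, so a tiny initial difference grows exponentially. In floating-point arithmetic the sketch is only meaningful for a few dozen iterations, because each doubling shifts one bit out of the mantissa.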

For a quantum system this means that an initial distribution in phase-space gets folded and stretched such that after a certain time it exhibits fine structures. The Wigner function changes its sign and this leads to interference. Now Zurek argues that friction destroys these structures. This means that the system can no longer show interference.

Note: this way of thinking about quantum chaos cannot be fully compared to classical mechanics, because chaos doesn’t exist in the typical sense here. If two wave functions overlap, they will always overlap, since the unitary time evolution generated by the Hamiltonian conserves the scalar product between states.

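Assume $\Delta x$ is the distance between the maxima in phase space. Then the period of the fine structure (along the momentum direction) is proportional to

$$\frac{\hbar}{\Delta x}$$

and

$$W(p) \propto \cos\!\left(\frac{\Delta x\, p}{\hbar}\right).$$

We can now look at the friction and the resulting diffusion by using the differential equation for Brownian motion. It has the same structure as the equation for heat diffusion:

$$\frac{\partial W}{\partial t} = D\,\frac{\partial^2 W}{\partial p^2}.$$

So by plugging in the cosine we get:

$$\frac{\partial W}{\partial t} = -\frac{D\,\Delta x^2}{\hbar^2}\,W,$$

and this can easily be solved as $W(t) = W(0)\,e^{-t/\tau_D}$, where

$$\tau_D = \frac{\hbar^2}{D\,\Delta x^2}.$$

This is a typical solution for a system under the influence of friction, and $\tau_D$ gives a characteristic timescale or, equivalently, $1/\tau_D$ gives us a rate. We will see later how big this scale really is for a macroscopic system, but first we need an estimate for the diffusion coefficient $D$. This is provided by the Einstein relation between friction and diffusion. Friction leads to a loss of kinetic energy, so for a friction coefficient $\gamma$ (defined here by $\dot p = -\gamma p$) we have

$$\frac{dE}{dt} = -2\gamma E,$$

where $E = p^2/2m$. But at the same time the kinetic energy increases because of the diffusion, which makes $\langle p^2 \rangle$ grow at the rate $2D$, so

$$\frac{dE}{dt} = \frac{D}{m}.$$

For the system to be at equilibrium, both processes have to be equal, so

$$2\gamma E = \frac{D}{m}.$$

But in equilibrium, we also have

$$E = \tfrac{1}{2} k_B T,$$

where $T$ is the temperature and $k_B$ is Boltzmann's constant. This leads to

$$D = m\,\gamma\,k_B T$$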

and, inserting typical parameter values for a small macroscopic object, we get the corresponding timescale. This timescale is an extremely short one, so short that we cannot even begin to think about measuring it at the moment.
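To get a feeling for the orders of magnitude, here is a rough numerical sketch. It assumes the decoherence time $\tau_D = \hbar^2/(D\,\Delta x^2)$ with $D = m\gamma k_B T$, so that the dimensionless ratio $\gamma\,\tau_D = \hbar^2/(m k_B T \Delta x^2)$ compares decoherence to relaxation. The prefactor depends on the convention chosen for the friction coefficient, and the parameter values below (1 g, 300 K, 1 cm) are illustrative assumptions rather than the numbers used in the lecture.

```python
HBAR = 1.054571817e-34  # reduced Planck constant in J s
K_B = 1.380649e-23      # Boltzmann constant in J/K


def decoherence_to_relaxation_ratio(mass, temperature, separation):
    """Dimensionless ratio gamma * tau_D = hbar^2 / (m k_B T dx^2).

    mass in kg, temperature in K, separation in m.
    Assumes D = m * gamma * k_B * T (convention-dependent prefactor).
    """
    return HBAR**2 / (mass * K_B * temperature * separation**2)


# Illustrative values: a 1 g object at room temperature,
# in a superposition of positions 1 cm apart.
ratio = decoherence_to_relaxation_ratio(mass=1e-3, temperature=300.0, separation=1e-2)
print(f"tau_D / tau_relaxation ~ {ratio:.1e}")
```

With these assumed values the decoherence time comes out roughly forty orders of magnitude shorter than the relaxation time $1/\gamma$, which is why macroscopic superpositions are never observed in practice.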

Schrödinger’s cat and Wigner’s infinite regression

Let’s take a step back and look at Schrödinger’s cat again, where nuclear decay determines the state of the cat. The atom is in a superposition of being decayed and not being decayed; the Geiger counter is then also in a superposition, namely of detecting an event and not detecting one, and so on. Where do we stop thinking in terms of superpositions: at the cat? At the observer? Or even at the Universe? This is Wigner’s infinite regression. It seems obvious that a good theory needs to break this chain of reasoning somewhere, so it has to properly define the border between micro and macro.

Example: Schrödinger’s cat

The cat is seemingly in a superposition of states $|\text{alive}\rangle + |\text{dead}\rangle$. First of all, this is not a proper state, since the cat is a composite system. But if we assume we could write the state that way, then decoherence leads to an increase in the entropy, because it removes the superposition (passage from a pure state to a statistical mixture).
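The entropy increase can be illustrated with a toy two-level model. The sketch below treats "alive" and "dead" as the two basis states of a single qubit, which is of course a drastic simplification of a real cat, and models decoherence simply as the removal of the off-diagonal elements of the density matrix. The function names are illustrative.

```python
import math


def von_neumann_entropy(rho):
    """Von Neumann entropy (in nats) of a real symmetric 2x2 density matrix."""
    (a, b), (_, d) = rho
    # Eigenvalues of [[a, b], [b, d]] from the mean and the spread of the diagonal.
    mean = (a + d) / 2.0
    radius = math.sqrt(((a - d) / 2.0) ** 2 + b ** 2)
    eigenvalues = (mean + radius, mean - radius)
    # Zero eigenvalues contribute nothing (p log p -> 0 as p -> 0).
    return -sum(p * math.log(p) for p in eigenvalues if p > 1e-15)


def decohere(rho):
    """Remove the coherences (off-diagonal elements), keeping the populations."""
    return [[rho[0][0], 0.0], [0.0, rho[1][1]]]


# Pure state (|alive> + |dead>)/sqrt(2) written as a density matrix.
pure = [[0.5, 0.5], [0.5, 0.5]]
mixed = decohere(pure)

print("entropy before decoherence:", von_neumann_entropy(pure))
print("entropy after decoherence: ", von_neumann_entropy(mixed))
```

The pure superposition has zero entropy, while the fully decohered mixture has entropy $\ln 2$, the maximum for a two-level system.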

Example: oscillator at temperature $T$

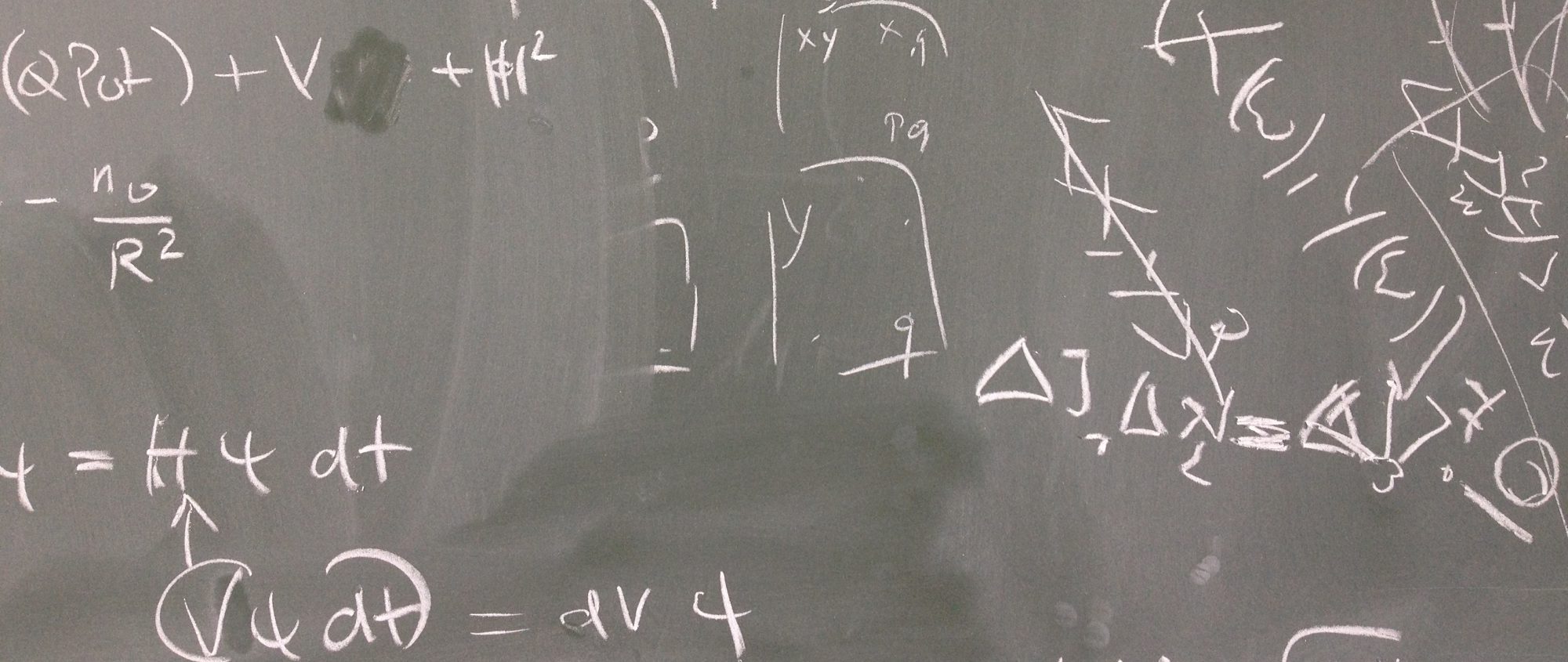

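Hamiltonian

$$H = \frac{p^2}{2m} + \frac{k x^2}{2}$$

with spring constant $k$ and oscillator frequency $\omega = \sqrt{k/m}$.

Wigner function of the thermal equilibrium state at temperature $T$ (with Boltzmann's constant $k_B$)

$$W(x,p) = \frac{1}{\pi\hbar}\tanh\!\left(\frac{\hbar\omega}{2 k_B T}\right)\exp\!\left[-\frac{2}{\hbar\omega}\tanh\!\left(\frac{\hbar\omega}{2 k_B T}\right) H(x,p)\right]$$

So here we have an uncertainty product

$$\sigma_x\,\sigma_p = \frac{\hbar}{2}\coth\!\left(\frac{\hbar\omega}{2 k_B T}\right) > \frac{\hbar}{2}$$

larger than the minimum allowed by the Heisenberg uncertainty principle.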
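As a quick numerical check, the following sketch evaluates the standard result $\sigma_x \sigma_p = (\hbar/2)\coth(\hbar\omega/2k_B T)$ for a thermal harmonic oscillator and compares it to the Heisenberg minimum $\hbar/2$. The frequency and temperature values are illustrative assumptions, not parameters from the lecture.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant in J s
K_B = 1.380649e-23      # Boltzmann constant in J/K


def thermal_uncertainty_product(omega, temperature):
    """sigma_x * sigma_p = (hbar/2) * coth(hbar*omega / (2 k_B T)).

    omega is the angular frequency in rad/s, temperature in K.
    """
    arg = HBAR * omega / (2.0 * K_B * temperature)
    return 0.5 * HBAR / math.tanh(arg)


# Illustrative case: a 1 kHz mechanical oscillator at room temperature.
omega = 2.0 * math.pi * 1e3
product = thermal_uncertainty_product(omega, temperature=300.0)
print(f"uncertainty product / (hbar/2) = {product / (0.5 * HBAR):.2e}")
```

In the high-temperature limit $k_B T \gg \hbar\omega$ the product tends to the classical value $k_B T/\omega$, which for these assumed values exceeds the Heisenberg minimum by about ten orders of magnitude. Only for $k_B T \ll \hbar\omega$ does the thermal state approach the minimum-uncertainty ground state.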
